Meta’s AudioCraft AI Creates Music and Sound Effects From Text Inputs

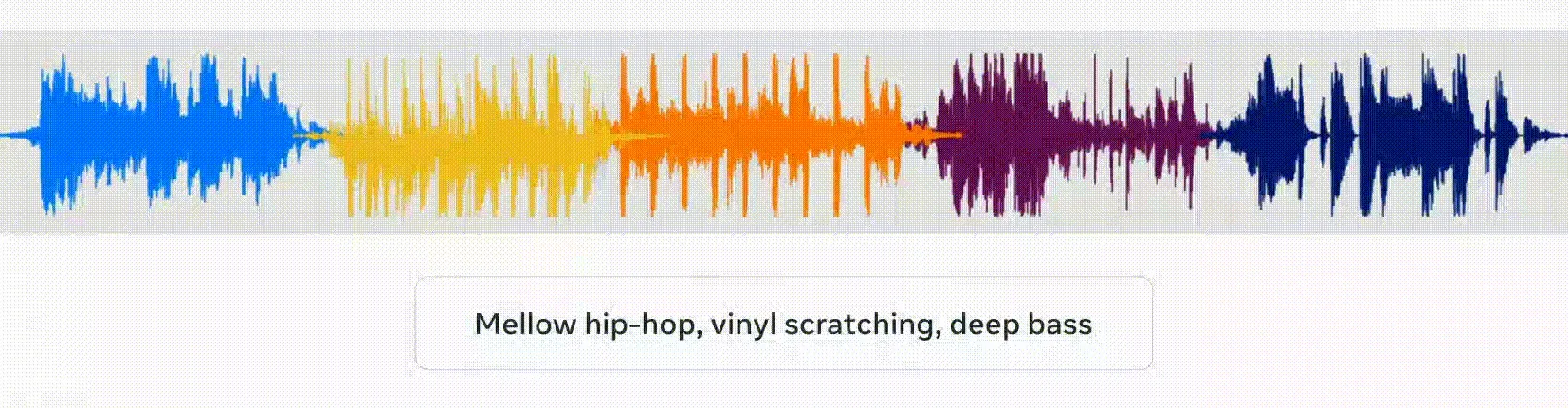

Meta has developed AudioCraft, a set of AI tools that generates custom audio and music for projects from text prompts. The aim is to cut the time and cost usually involved in creating an original soundtrack: according to Meta, a few prompts are enough to produce high-quality, realistic audio and music.

Meta notes that while AI-generated visuals have taken off, AI-generated audio still lags behind. The new AudioCraft family of tools, it says, gives users “music, audio, compression and generation – all in one place.”

AudioCraft consists of three models: AudioGen, MusicGen and EnCodec. AudioGen generates audio from text prompts and was trained on public sound effects, while MusicGen does the same for music and was trained on music licensed by Meta. The EnCodec decoder, meanwhile, enables higher-quality music generation with fewer artifacts.

Users can create a range of sound effects

Meta says it is also releasing pre-trained AudioGen models that let users generate ambient sounds and sound effects, such as a dog barking, car sounds or footsteps on a wooden floor.

“The AudioCraft family of models are capable of producing long-lasting, high-quality sound and are easy to use,” said Meta.

“With AudioCraft, we’re simplifying the overall design of audio generative models compared to previous work in the field – giving people the perfect recipe to play with existing models that Meta has developed over the last several years, while also allowing them to push the boundaries and develop their own,” it added.

Meta has open-sourced the AudioCraft family of models

Meta is also open-sourcing its audio generation tools, as it did with its Llama 2 LLM, so that researchers and developers can build their own models. Meta says that open-sourcing the models will help advance the field of AI-generated audio.